AI in Healthcare

AI in Healthcare – Increasing trust in data-driven applications

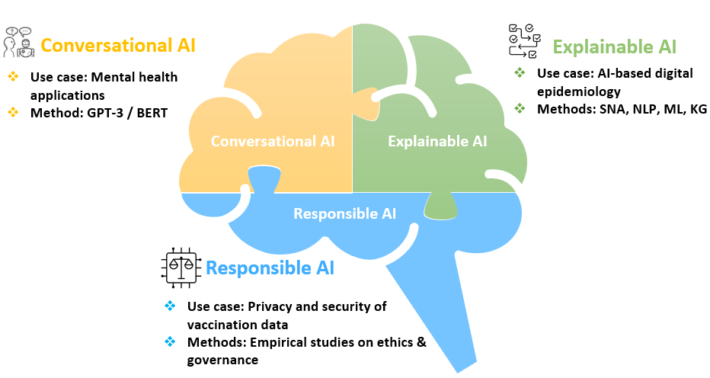

The habilitation project aims to increase trust in, and the reliability of, AI applications in the healthcare sector. It focuses on three use cases, each addressing a different aspect of trustworthiness.

Conversational AI

Reliability and validity are further aspects contributing to (mis)trust in AI systems. Chatbots have attracted a great deal of attention from both academia and industry. Basic question-answering (Q&A) chatbots have been implemented successfully, for example as intelligent customer service agents. However, much more work is needed to develop systems that answer as reliably and meaningfully as a human expert would.
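To make the gap between basic Q&A chatbots and expert-level answers concrete, here is a minimal sketch of a retrieval-based FAQ bot, assuming a small hypothetical FAQ and a bag-of-words cosine similarity; it is not the project's system. The `threshold` parameter is an illustrative assumption: below it, the bot hands over to a human instead of guessing, which is one simple way to trade coverage for reliability.

```python
import math
import re
from collections import Counter

# Hypothetical FAQ entries for illustration only; not from the project.
FAQ = {
    "What are your opening hours?": "We are open Monday to Friday, 9:00 to 17:00.",
    "How do I reset my password?": "Use the 'Forgot password' link on the login page.",
    "How can I contact support?": "Write to the support team via the contact form.",
}

FALLBACK = "I am not sure. Let me connect you with a human expert."


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def answer(question: str, threshold: float = 0.5) -> str:
    """Return the answer of the most similar FAQ entry, or a fallback
    when no entry is similar enough (the bot does not guess)."""
    q = Counter(tokenize(question))
    best_score, best_answer = 0.0, None
    for faq_q, faq_a in FAQ.items():
        score = cosine(q, Counter(tokenize(faq_q)))
        if score > best_score:
            best_score, best_answer = score, faq_a
    if best_answer is None or best_score < threshold:
        return FALLBACK
    return best_answer


print(answer("How can I contact the support team?"))
print(answer("Tell me about the weather"))
```

A bot like this only matches surface wording; it cannot reason about a question the way a human expert would, which is the limitation that language models such as BERT or GPT aim to reduce.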

As part of this research project, one use case lies in the field of conversational AI, the study of techniques for software agents that can engage in natural conversational interactions with humans (Ram et al., 2017). The focus is on developing a mental health application. The main motivation is the growing challenge of addressing mental health issues, which is expected to become even more pressing in the coming decades as a consequence of the COVID-19 pandemic.

For this purpose, two language models are considered. The first is BERT (Bidirectional Encoder Representations from Transformers), developed and released by Google in 2018. Its large variant has 340 million parameters, and the model requires fine-tuning for each downstream task. The second is GPT (Generative Pre-trained Transformer), whose most recent release at the time of writing, GPT-3, was developed by OpenAI, a research company based in San Francisco. GPT-3 models are among the most advanced language models currently available for understanding and generating natural language. The largest GPT-3 model has 175 billion parameters and generally requires minimal fine-tuning. GPT-3 is offered as four models with different levels of capability, each suited to different tasks.

Both BERT and GPT have their advantages and disadvantages: BERT is an encoder that reads text in both directions, which suits classification and extraction tasks, whereas GPT is an autoregressive decoder that predicts text from left to right, which suits text generation. So far, both have been used successfully for advanced NLP tasks such as text classification, translation, grammar correction, Q&A, summarization, and sentiment analysis.
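The names of the two models hint at their main architectural difference. The sketch below is a simplification that builds the attention masks distinguishing a bidirectional encoder (BERT-style: every token may attend to every other token) from an autoregressive decoder (GPT-style: each token may attend only to itself and earlier tokens). In real Transformer implementations, masked positions are set to negative infinity before the softmax inside attention; here the mask is shown as 0/1 for readability, and `attention_mask` is a hypothetical helper name.

```python
def attention_mask(n_tokens: int, causal: bool) -> list[list[int]]:
    """mask[i][j] == 1 if position i may attend to position j.

    Bidirectional (BERT-style encoder): every token sees every token.
    Causal (GPT-style decoder): token i sees only positions 0..i,
    which is what allows the model to generate text left to right.
    """
    return [[1 if (not causal or j <= i) else 0 for j in range(n_tokens)]
            for i in range(n_tokens)]


tokens = ["the", "patient", "feels", "better"]
bert_mask = attention_mask(len(tokens), causal=False)
gpt_mask = attention_mask(len(tokens), causal=True)

# Print one row per token: which positions it is allowed to look at.
for tok, b_row, g_row in zip(tokens, bert_mask, gpt_mask):
    print(f"{tok:>8}  BERT {b_row}  GPT {g_row}")
```

The bidirectional mask lets BERT use context on both sides of a word, which helps understanding tasks; the triangular causal mask is what makes GPT a natural fit for generating text one token at a time.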

Explainable AI

A major reason for distrusting the results of an AI system is that domain experts cannot understand how the underlying machine learning model arrives at its decisions. Explainable AI (XAI), also called transparent or interpretable AI, refers to AI systems whose actions can be easily understood and analyzed by humans because they provide an auditable record of all factors and associations related to a given prediction (Hagras, 2018).
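As an illustration of what an auditable record can look like, here is a minimal sketch using a logistic regression model, whose prediction decomposes exactly into per-factor contributions on the log-odds scale. The feature names, weights, and bias are hypothetical and not clinically validated, and this intrinsically interpretable model is a stand-in chosen for clarity, not the method described by Hagras (2018).

```python
import math

# Hypothetical, hand-set weights for illustration; NOT a validated clinical model.
WEIGHTS = {"age_over_65": 0.9, "smoker": 0.7, "systolic_bp_over_140": 0.6}
BIAS = -2.0


def predict_with_record(patient: dict[str, int]) -> dict:
    """Return a risk score together with an auditable record of how
    each input factor contributed to it (logistic regression)."""
    # Each factor's contribution to the log-odds is weight * value.
    contributions = {f: w * patient.get(f, 0) for f, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    risk = 1 / (1 + math.exp(-logit))  # sigmoid maps log-odds to a probability
    return {
        "risk": round(risk, 3),
        "bias": BIAS,
        # Sorted so that the most influential factor is listed first.
        "contributions": dict(sorted(contributions.items(),
                                     key=lambda kv: abs(kv[1]), reverse=True)),
    }


record = predict_with_record({"age_over_65": 1, "smoker": 1, "systolic_bp_over_140": 0})
print(record)
```

Because the bias plus the listed contributions add up to the model's log-odds, a clinician can check every step of the prediction. More complex models such as deep neural networks generally do not decompose this simply, which is why dedicated explanation techniques are needed for them.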

In healthcare, explainability has been identified as a “requirement for clinical decision support systems because the ability to interpret system outputs facilitates shared decision-making between medical professionals and patients and provides much-needed system transparency” (Turri, 2022).

One method that has gained importance in the field of XAI is the knowledge graph. Knowledge graphs (KGs) organize data from multiple sources, capture information about entities of interest in a given domain or task (such as people, places, or events), and forge connections between them. In data science and AI, knowledge graphs are commonly used to add context and depth to other, more data-driven AI techniques such as machine learning, and to serve as bridges between humans and systems, for example by generating human-readable explanations. (Source: The Alan Turing Institute)
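The idea of a knowledge graph as a bridge to human-readable explanations can be sketched with a handful of subject-relation-object triples. The example below assumes hypothetical medical entities and relations and uses breadth-first search to find a connecting path, which is then rendered as a plain-language explanation. Production knowledge graphs would typically live in a graph database or an RDF store rather than a Python list.

```python
from collections import deque
from typing import Optional

# Hypothetical triples (subject, relation, object) for illustration only.
TRIPLES = [
    ("patient_42", "has_symptom", "fever"),
    ("patient_42", "has_symptom", "cough"),
    ("fever", "symptom_of", "influenza"),
    ("cough", "symptom_of", "influenza"),
    ("influenza", "treated_with", "antiviral"),
]


def explain(start: str, goal: str) -> Optional[str]:
    """Breadth-first search over the graph; the path found doubles as a
    human-readable explanation of why `goal` is linked to `start`."""
    # Build an adjacency list: subject -> [(relation, object), ...]
    edges: dict[str, list[tuple[str, str]]] = {}
    for s, r, o in TRIPLES:
        edges.setdefault(s, []).append((r, o))

    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return "; ".join(f"{s} {r.replace('_', ' ')} {o}" for s, r, o in path)
        for rel, nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None  # no connection between the two entities


print(explain("patient_42", "influenza"))
```

Breadth-first search returns a shortest chain of relations, which keeps the generated explanation as concise as the graph allows.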

With the outbreak of the COVID-19 pandemic, the World Health Organization has signaled that it regards AI as an important technology not only for tackling current COVID-19-related challenges but also for building next-generation epidemic preparedness. Accordingly, the focus of this use case is on using digital trace data (cell phone data, web searches, social media interactions, or Wikipedia entries) to study and prevent disease outbreaks.

This work belongs to digital epidemiology, a field that has grown in importance in recent years. Epidemiology aims to identify the distribution, incidence, and etiology of human diseases in order to better understand their causes and prevent their spread. Traditionally, studies have relied on data collected primarily in hospitals by health personnel. In recent years, however, new data sources have become available for studying and preventing disease outbreaks: our interactions stored digitally. (Source: Salathé et al., 2012)
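A very simple form of outbreak signal detection on digital trace data is to flag weeks in which, for example, searches for a symptom rise well above their recent baseline. The sketch below assumes hypothetical weekly search counts and a rule of the form "current week > mean of the previous `window` weeks + `k` standard deviations"; it is a simplified aberration-detection heuristic for illustration, not the method of Salathé et al. (2012).

```python
from statistics import mean, stdev


def flag_unusual_weeks(counts: list[int], window: int = 4, k: float = 2.0) -> list[int]:
    """Return indices of weeks whose count exceeds the mean of the
    preceding `window` weeks by more than `k` sample standard deviations."""
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        threshold = mean(baseline) + k * stdev(baseline)
        if counts[i] > threshold:
            flagged.append(i)
    return flagged


# Hypothetical weekly counts of searches for "flu symptoms".
weekly_searches = [120, 115, 130, 125, 118, 122, 240, 310, 128]
print(flag_unusual_weeks(weekly_searches))
```

Because the baseline window slides over the flagged weeks themselves, a prolonged outbreak quickly raises the threshold; practical surveillance systems often leave a gap between the baseline and the week being tested for this reason.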

The methods for this use case combine traditional machine learning with NLP, social network analysis, and knowledge graphs.

Responsible AI

Increasing trust in decisions made by AI applications in healthcare depends not only on accurate decision support systems but also on addressing the challenges of ethical and legal frameworks for AI. AI ethics is a set of guidelines that advise on the design and outcomes of artificial intelligence (IBM, 2022). It is a major topic not only in academia but also for tech companies such as Google, Facebook, and Microsoft, because AI systems are being used for sensitive decisions, especially in healthcare. Experts warn against using such systems without clearly defined frameworks, policies, and guidelines that prevent bias, privacy, and security issues and, overall, reduce the risk of relying on a faulty AI system. Major topics in AI ethics in healthcare are informed consent to use, safety and transparency, liability, algorithmic fairness and biases, and data privacy (Gerke et al., 2020).
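One of the topics above, algorithmic fairness, can be made measurable. The sketch below computes the demographic parity difference, the gap in positive-decision rates between patient groups, for hypothetical model decisions. It is only one of several fairness criteria, and in clinical settings where true need differs between groups, other criteria (for example, comparing error rates across groups) may be more appropriate.

```python
def selection_rate(decisions: list[int]) -> float:
    """Share of positive decisions (1 = e.g. referred for treatment)."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(decisions_by_group: dict[str, list[int]]) -> float:
    """Largest gap in selection rate between any two groups;
    0.0 means all groups receive positive decisions at the same rate."""
    rates = [selection_rate(d) for d in decisions_by_group.values()]
    return max(rates) - min(rates)


# Hypothetical model decisions for two patient groups.
decisions = {
    "group_a": [1, 1, 0, 1, 0, 1, 1, 0],  # 5 of 8 positive
    "group_b": [1, 0, 0, 0, 1, 0, 0, 0],  # 2 of 8 positive
}
print(demographic_parity_difference(decisions))
```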

Data governance offers solutions to these concerns. Its objectives are twofold: first, limiting access to patients’ data to ensure privacy and security; and second, securely sharing patients’ data between systems for integration and decision-making purposes (Alofaysan et al., 2014). Data governance in any domain, including healthcare, involves people, processes, policy, and technology.
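The two governance objectives, restricting access and sharing data securely, can be sketched with a role-based access policy combined with keyed pseudonymization. The roles, fields, and key below are hypothetical. HMAC-SHA256 from Python's standard library yields a stable pseudonym per patient that cannot be recomputed without the key, so records can be linked across systems without exposing the original identifier. This is a sketch of common techniques, not the framework of Alofaysan et al. (2014).

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be kept in a key vault,
# never hard-coded, so that only the data owner can recompute pseudonyms.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical role-based policy: which fields each role may read.
ACCESS_POLICY = {
    "physician": {"patient_id", "name", "diagnosis"},
    "researcher": {"pseudonym", "diagnosis"},
}


def pseudonymize(patient_id: str) -> str:
    """Keyed hash (HMAC-SHA256): the same patient always maps to the same
    pseudonym, but it cannot be recomputed without the key."""
    return hmac.new(SECRET_KEY, patient_id.encode(), hashlib.sha256).hexdigest()[:16]


def view_for(role: str, record: dict[str, str]) -> dict[str, str]:
    """Return only the fields the role is allowed to see; unknown roles see nothing."""
    enriched = {**record, "pseudonym": pseudonymize(record["patient_id"])}
    allowed = ACCESS_POLICY.get(role, set())
    return {k: v for k, v in enriched.items() if k in allowed}


record = {"patient_id": "P-001", "name": "Jane Doe", "diagnosis": "influenza"}
print(view_for("researcher", record))
```

Denying access by default for unknown roles keeps the policy fail-safe: a role must be granted fields explicitly before it can read anything.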

Considering the recent COVID-19 pandemic and the many questionable decisions around mandatory vaccination, there are several opportunities for future research, namely:

- The role of social media in spreading vaccine misinformation and its consequences.

- Privacy awareness of vaccinated people: are they properly informed about the collection and processing of their personal information?

- Fraud detection and prevention.

The methods for this use case are primarily qualitative studies, or empirical research combined with machine learning and NLP.

References:

Ram et al., 2017. https://arxiv.org/ftp/arxiv/papers/1801/1801.03604.pdf

Salathé et al., 2012. https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1002616

Turri, 2022. https://insights.sei.cmu.edu/blog/what-is-explainable-ai/

Hagras, 2018. https://ieeexplore.ieee.org/abstract/document/8481251

IBM, 2022. https://www.ibm.com/cloud/learn/ai-ethics

Gerke et al., 2020. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7332220/

Alofaysan et al., 2014. https://www.scitepress.org/papers/2014/47381/47381.pdf